I Spent 8 Hours Writing Quicksort On Leetcode

Published on

"The quieter you become, the more you are able to hear"

- Backtrack/Kali Linux Wallpaper

Ok I already lied, the quote is actually from some guy I've never heard of before named Ram Dass, but how many of you actually knew that? I didn't. But let's move on.

For those who may not be aware, Leetcode is a website focused around solving programming problems that involve data structures and algorithms (DSA), and often times have time complexity or space complexity requirements (O(1), O(log(n)), O(n), etc). Leetcode is also often used by employers to judge competency for high paying programming jobs.

Now let's see what programmers say about Leetcode:

"I solved X questions on Leetcode and it was a waste of time"

- Programmer who solved X questions on Leetcode"Learning DSA won't make you a better programmer"

- Programmer who isn't good at DSA"I HATE LEETCODE!!!!!!!!!!!!!!!!!"

- Almost every programmer

Or my personal favorite:

"Interviewers don't even ask you stuff like sorting algorithms in an interview, so why are you spending 8 hours writing quick sort? Dude just use the language's built in sort function"

- Programmers watching me skill issue in Python while grinding Leetcode

This rant isn't to put any of these people on blast, but to explain why this well-intentioned advice is harmful, and to explain the value of skill issuing for hours on Leetcode. There is so much more to Leetcode than grinding for a couple of weeks and then getting that high paying software engineering job at X popular company. Wait shit X is a real company now. Uh, Y popular company.

You Spent 8 Hours Writing Quicksort?

Yes I did, and I'm still not done. "That's such a waste of time, you're an idiot."

Let me explain why it's not a waste of time.

I was doing random Leetcode questions, and I got Leetcode Question 3075. Maximize Happiness of Selected Children. If you want to give it a try, go ahead now before I get into how to solve it.

This page was intentionally left blank.

This page was also intentionally left blank.

Ok that's enough. If you get spoiled now it's your own damn fault.

The obvious solution is to sort the array in descending order, then sum the first k items from the array subtracting the index from each value as you add it to the sum. Why is this a Leetcode medium? This is pretty easy. Well, since Leetcode is all about DSA, I assume you're supposed to write your own sorting algorithm rather than use the language's built in sort, so that's exactly what I did.

I dabbled in DSA briefly a few months ago, and unfortunately much of it didn't stick. But I did remember that merge sort was a cool sorting algorithm. I take a look at the Wikipedia article to remember how it works, and... It looks a bit intense. I remember there's an easier sorting algorithm, what's that sorting algorithm that The Primeagen likes... It's really easy to implement... It's fast... It's something with a pivot... QUICKSORT!

Ok let me look up the Wikipedia article on quicksort and... Ok it's a divide and conquer strategy. I've learned this before and I've written it before, but the suggested implementation looks completely foreign to me. Surely an example of a simple implementation has to be out there somewhere. Even though it's not a good resource, let me try Geeks for Geeks. Hmm, this implementation doesn't look familiar either... At this point, a great hero took pity on me and showed me a quick and dirty implementation of quicksort to demonstrate how it works, something along the lines of:

function quicksort(arr){if(arr.length < 2){return arr;}let pivot = random_array_element(arr);let start = [];let middle = [];let end = [];for(let i = 0; i < arr.length; i++){if(arr[i] < pivot){start[] = arr[i];}}for(let i = 0; i < arr.length; i++){if(arr[i] == pivot){middle[] = arr[i];}}for(let i = 0; i < arr.length; i++){if(arr[i] > pivot){end[] = arr[i];}}return quicksort(start) + middle + quicksort(end);}

This still doesn't look familiar to me at all, but the hero of this story slammed out 3 Leetcode mediums in 20 minutes while I was staring blankly at Geeks for Geeks, so I'm pretty confident he knows how quicksort works. Looking back now, I was confusing the implementation of quicksort with binary search, and I was trying to find an algorithm that didn't exist. Thanks me.

Anyway. this implementation is obviously quite inefficient, but it's a great demonstration of the concept. You put all of the stuff less than the pivot on the left, all of the stuff equal to the pivot in the middle, and all of the stuff greater than the pivot on the right, and then recur the algorithm on the left and right sides of the pivot values.

The reason this implementation is inefficient is because we're declaring a bunch of new arrays and copying data all over the place. I'm a C programmer at heart. Copying memory hurts my soul, so let's figure out an alternative implementation that modifies the array in-place. I remember that some sorting algorithms like bubble sort work by swapping elements around the array in-place, so surely other sorting algorithms can be implemented in the same way. After skill issuing for a few more hours, I remembered that Primeagen has a Free DSA Course (just make an account on the website, not sponsored btw).

After reviewing Prime's approach and changing it to work iteratively, I came up with the following implementation:

let arr = [/* random numbers */];let stack = [[0, arr.length - 1]];while(stack.length > 0){// This is called destructuring btwlet low, high = arr.shift();let pivot = arr[high];let swap_index = low - 1;for(let i = low; i <= high; i++){if(arr[i] <= pivot){swap_index++;swap(arr[swap_index], arr[i]);}}if(swap_index - 1 > low){stack[] = [low, swap_index - 1];}if(high > swap_index + 1){stack[] = [swap_index + 1, high];}}

Take a minute to read through this and understand how it works. This approach should be pretty performant despite my Python skill issues. Maybe numpy would make it fast. I don't know. I don't like Python so I don't care. Sorry, not sorry. After skill issuing for a bit, I clicked the submit button and hoped that I'd finally get a solve on this problem after 8 hours.

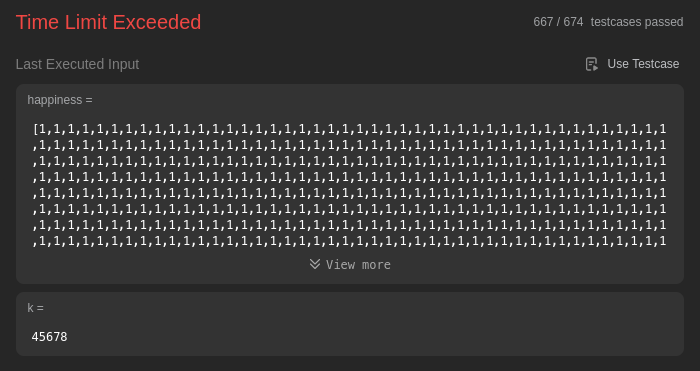

I'm skill issued with Python, and Python is very slow, but this shouldn't be timing out with a sorting algorithm that runs in O(n log(n)). What's going on?

Well, remember that quicksort is O(n log(n)) only in the average case. In the worst case, quicksort is O(n^2). After doing a little bit of testing, I'm pretty confident that my implementation is running closer to O(n^2) instead of O(n log(n)).

The hero suggested that I add an optimization by grouping all of the elements equal to the pivot into a "middle" partition so that all elements equal to the pivot are handled in one step, but after thinking on this for a while I decided that there was more to the story that I wanted to investigate.

Understanding The Implementation's Time Complexity

The way quicksort works is by grabbing an arbitrary pivot, and then you partition everything smaller than the pivot on the left and everything larger than the pivot on the right, then you quicksort the left and right partitions repeatedly until the array becomes sorted. This is obviously a divide and conquer approach.

But what if quicksort didn't divide the array? What scenarios would cause this to happen? Well, the pivot gets removed from the input array every recursion step, but quicksort would still go through the n length array (minus 1 for every step) n times, which is O(n^2). Thinking about it, this is exactly what's happening with my implementation when the entire array is 1, but what other scenarios would cause this behavior?

Well, my implementation picks the last element as the pivot every time. If every number to the left of the pivot is already smaller than the pivot, then the pivot is removed and the remaining array is passed to the next iteration of the loop as the left partition. In the next iteration of the loop, the implementation picks the last element as the pivot. If every number to the left of the pivot is again smaller than the pivot... Wait... The array is already sorted! Logically, this would also happen if the array was sorted in reverse.

Applying this logic in the opposite direction, what's the best case scenario? Well, if the worst case scenario is having everything in only one partition and the other partition is completely empty, then the best case scenario should be both partitions hold (as close as possible to) half of the remaining elements. The first iteration would go over n elements from the array, the second iteration would go over ((n - 1) / 2) twice, the third iteration would go over ((n - 3) / 4) four times... so on until the last iteration i, which goes over ((n - (n/2)) / 2^i) elements in the array 2^i times.

In the above equation for calculating the number of remaining elements in the array at each recursion step, the n/2 and 2^i represent constants, and time complexity doesn't care about constants, so we remove them. That leaves us with n, but we use n a total of 2^i times. We know that 2^i has to be as close as possible to n because you can't divide an array of n elements by more than n, so the i in 2^i has to be log(n).

Iterating over n elements log(n) times is n log(n). We know that the best case for quicksort is O(n log(n)), so this makes sense.

Finally, now that we know the best and worst case scenarios, what does the average case look like? For my implementation, it depends on how sorted the input array is. The more sorted the input array is, the closer the time complexity will be to n^2. The more unsorted the input array is, the closer the time complexity will be to n log(n). Assuming the input array is completely random, how likely is it that the array will be sorted? Well, it depends. An array that is 100% duplicates will always be sorted because an array full of the same number can't not be sorted. An array that has no duplicates has a 1 in n! (factorial) chance of being already sorted. At the numbers where n^2 matters, n! is astronomically unlikely.

So to summarize, the best case for qucksort is n log(n), the worst case is n^2, and the average case depends on how well the implementation chooses a pivot that divides the array. For my specific implementation, arrays that are closer to sorted will be closer to the worst case n^2, and duplicates increase the "already sorted"ness of the input array. The less sorted the input array is, the closer my implementation runs to the best case n log(n).

Ok, But What's The Point Of This?

The point is that before I spent 8 hours writing quicksort, I didn't know about any of this. Some of these insights might seem obvious, but they have massive implications that would be very easy to overlook in real world scenarios. To name a few:

- The implementation of Quicksort I used is actually perfectly fine when the input array is unlikely to be sorted.

- If you have information about how your input data may arranged, quicksort will run close to best case if you can consistently choose a pivot that will divide the array.

- If your quicksort implementation chooses a pivot at random, it should run reasonably close to best case the overwhelming majority of the time.

- Divide and conquer strategies in general require good dividing, so any divide and conquer strategy with a dynamic way to partition data needs to be very smart about how the input is divided.

- Divide and conquer algorithms work well with multithreading (read this rant), so quicksort is a potential choice for multithreaded environments.

If I didn't spend 8 hours writing quicksort in Python for this Leetcode problem, I wouldn't have figured all of this out.

But Why Did You Need To Figure All Of This Out?

Well, there are many reasons. The first and foremost reason is that I did something called learning, and learning doesn't seem popular among software developers for some reason. There is a lot of money in the industry, and that's not a bad thing at all, but if we want to call ourselves software engineers we need to prioritize engineering in our decision making. Do you know what happens to real engineers who prioritize money and laziness over engineering and learning? They fucking die, and innocent people can die too.

Leetcode is a great way to learn DSA in an environment where failure is meaningless. You have thousands of problems with many suggestions for multiple potential solutions, and you're given hundreds or even thousands of test cases to test your solution against. Grinding these is also just a great way to keep your skills sharp, and maybe even learn something new.

Do you remember the quote from the start of this article, and how I lied about the origin of the quote? The truth deserves to be known, and although I knew nothing about Ram Dass before writing this article, he deserves to be treated with respect and accurately credited for his work. The truth always deserves respect. It's no secret that programming sucks so hard for so many reasons, so let's make things suck a little bit less by following at least the most basic technical standard: Objective Truth. Start grinding, and become based my friends.

Happy coding,

AOP