Can C++ Atomics Deadlock?

Published on

Did you know that JavaScript has atomics? Did you know that JS atomics include a function to asynchronously wait for an atomic operation to finish? If you're like me, you just took 2d6 psychic damage. Sorry (not sorry).

Ok, but that's JS, and this rant is about C++. Surely C++ is... Oh god what is this? What is happening?

Before we get into it, let's explore what an atomic operation even is and understand it the correct way.

What Are Atomics?

Atomic operations are a hardware feature that guarantees a CPU instruction will not be interrupted by anything. In x86_64, this is done by adding a "lock prefix" to certain instructions. Many CPU architectures do not support any atomic operations, but x86_64 is one of the few that actually require atomic operations as a part of the core specification, and it's one of the reasons I actually like x86_64. I believe some other CPU architectures such as ARM have atomics, but I can't say for certain because I don't know everything. Not yet.

According to the AMD64 Manual Volume 3 Page 11, "The prefix is intended to give the processor exclusive use of shared memory in a multiprocessor system."

(in this context, "processor" means CPU core). This might sound weird, and that's because it is weird. An atomic is actually a lock on a single piece of data for a single CPU instruction. It's still a lock, but you should think of it as an atomic lock, a lock that is held atomically, a lock that is held for the smallest amount of time possible.

Atomic locks are acquired and released at the hardware level in 1 CPU cycle. If 2 threads try to lock the same data on the same CPU cycle, one thread will succeed and the other thread will fail.

Why use Atomics?

Let's say there are 2 threads and 2 mutexes.

Thread 1 acquires a lock on mutex 1, and thread 2 acquires a lock on mutex 2.

Thread 1 doesn't release the lock on mutex 1 and moves on to acquire the lock on mutex 2. Mutex 2 is already locked, so thread 1 sits and waits for the lock on mutex 2 to be released.

Thread 2 doesn't release the lock on mutex 2 and moves on to acquire the lock on mutex 1. Mutex 1 is already locked, so thread 2 sits and waits for the lock on mutex 1 to be released.

Thread 1 is waiting on thread 2, and thread 2 is waiting on thread 1, and now your program froze because nothing can make progress. This is called a deadlock.

Atomics use a locking mechanism, however, no thread can hold a lock for longer than a single CPU cycle, so it's impossible for threads to make other threads wait. This means that deadlocking is impossible, and allows for something called Lock-Free Algorithms! Note that lock-free algorithms aren't necessarily fast, lock-free algorithms just guarantee that your code won't deadlock (i.e. "there is guaranteed system-wide progress"

).

Finally, C++ Atomics

Alright, now that we've gone over what atomics are, we can get onto C++ atomics. If an atomic lock only lasts for a single CPU instruction, then why is there a function for waiting on an atomic in C++? If we look at the is_lock_free function, we can see that "All atomic types ... may be implemented using mutexes or other locking operations, rather than using the lock-free atomic CPU instructions."

And the truth becomes a little more clear.

C++ is a programming language that has to work on a lot of hardware, and a lot of hardware does not have atomics. From the perspective of designing an atomics API in C++, this strange decision suddenly makes a lot of sense. You need to provide a general interface for atomic operations that's consistent between many different kinds of hardware architectures, regardless of what the underlying hardware actually supports. If you don't make a generalized interface, you're going to need a bunch of hardware-specific APIs, and we already know that C++ is complicated enough.

I will refer to these fallback mutex-based atomics as software atomics. You can test whether or not a datatype T can be implemented using hardware atomics in C++ by using std::atomic<T>{}.is_lock_free()std::atomic<T>{}.is_always_lock_free()

Can C++ Atomics Deadlock?

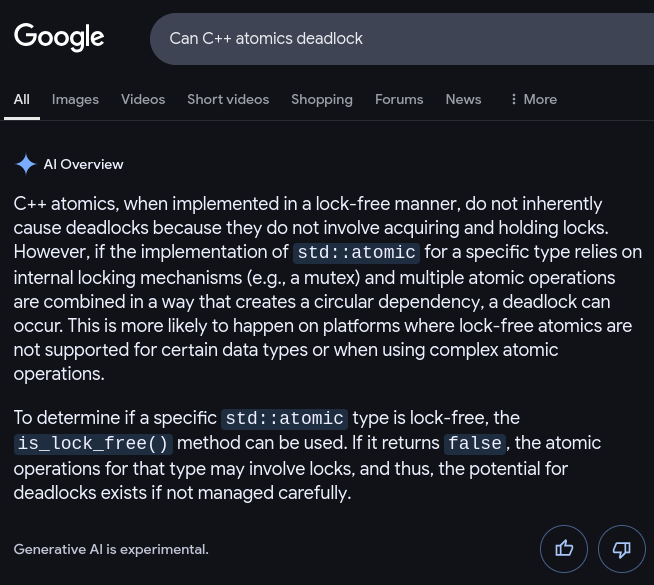

Now if you're like me, the first thing you think when hearing the word mutex is "slow", and then the second thing you think is "deadlock". Since C++ atomics can be implemented using mutexes, does this mean C++ atomics can deadlock? I tried googling this, but I was unable to find a definitive answer. In my desperation, I looked at what Google's AI Gemini said in my search query:

On its face, this seems like a reasonable take. This was my initial thought, and many others have the same reaction when first hearing that C++ atomics can be backed by mutexes. After asking certain variations of the question and refreshing a few times, the AI generated a few examples demonstrating different ways to deadlock C++ atomics. I don't know that the ideas will work, but I haven't had to think too hard yet and I'm eager to get started, so let's do this and see what happens.

Testing Session #1

According to the AI, the following should cause a deadlock:

- Initialize variables

xandyto 1. - Thread 1 will change variable

xto 0, and then wait for thread 2 to change variableyto 0, and then exit. - Thread 2 will change variable

yto 0, and then wait for thread 1 to change variablexto 0, and then exit.

Sounds easy enough. I implemented my first test using Gemini's idea and hardware atomics, and it works as expected. The test verifies that the hardware will use atomic operations for uint64_t, and we do not observe a deadlock after running the program a few times.

Next, I implemented my second test, using Gemini's idea and software atomics. I forced software atomics by using a 24-byte struct that x86_64 CPUs can not handle atomically. The test first verifies that the hardware will not use atomic operations for the 24-byte struct, and when running the program we also do not observe any deadlocks.

Well, I guess Gemini was wrong. What else is new? Generative AI tells you what you want to hear, even if it has to make things up or lie to you. I knew this, but figured I'd give the AI a chance. Rip bozo.

Anyway, it seems that we're going to have to try a bit harder if we want to actually get a deadlock here. Time to turn on my brain and start thinking.

Looking For Ideas

After spending some time thinking about this, nothing particularly helpful came to mind. However, there is one great source of inspiration that we have yet to explore: The disassembly.

Let's start by looking at the disassembly of the hardware atomic version:

$ objdump -d gemini-hardware-atomic | less0000000000001120 <main>:...118e: 48 8d 35 eb 01 00 00 lea 0x20b(%rip),%rsi # 1380 <_Z17thread_function_1v>...119d: 48 8d 35 6c 01 00 00 lea 0x16c(%rip),%rsi # 1310 <_Z17thread_function_2v>

We can see that the main function is using function pointers to _Z17thread_function_1v and _Z17thread_function_2v. If you're not familiar, C++ uses something called Name Mangling to solve a variety of problems, so the names in the disassembly are a little bit weird. However, we can clearly see thread_function_1 and thread_function_2 in these mangled names.

0000000000001310 <_Z17thread_function_2v>:...133b: 48 c7 05 ca 2c 00 00 movq $0x0,0x2cca(%rip) # 4010 <y>1342: 00 00 00 00...1350: 48 8b 05 c1 2c 00 00 mov 0x2cc1(%rip),%rax # 4018 <x>1357: 48 83 f8 01 cmp $0x1,%rax135b: 74 f3 je 1350 <_Z17thread_function_2v+0x40>0000000000001380 <_Z17thread_function_1v>:13ab: 48 c7 05 62 2c 00 00 movq $0x0,0x2c62(%rip) # 4018 <x>13b2: 00 00 00 00...13c0: 48 8b 05 49 2c 00 00 mov 0x2c49(%rip),%rax # 4010 <y>13c7: 48 83 f8 01 cmp $0x1,%rax13cb: 74 f3 je 13c0 <_Z17thread_function_1v+0x40>

This is quite interesting. We can see that the disassembly simply uses mov instructions to move data around. If you've been paying attention, you might have noticed that mov is not one of the instructions that work with the lock prefix, but that's actually not a big deal. Instead of mov, you can use the xchg instruction to "exchange" the current shared data with the value you want to atomically store. Since this "exchanges" values, this also works as an atomic load. In this case, the C++ compiler must have realized that there was no real race condition, so it did things the easy way.

Now what about the test that uses software atomics?

The disassembly is way too long and complex for me to post and explain, but the disassembly shows pretty clearly that there is a lot of shifting of data between CPU registers before calling __atomic_load@plt and __atomic_compare_exchange@plt. It also does some stuff with %xmm0. Perhaps the GNU C++ Library optimizes software atomics that are able to fit into extended registers. We'll take a closer look at these functions later.

Testing Session #2

Now I have some more questions. What if we use RCU on the test that should use hardware atomics? Running my 3rd test, everything works as expected. No deadlocks. Next, let's take a look at the disassembly.

0000000000001310 <_Z17thread_function_2v>:132c: 31 d2 xor %edx,%edx132e: 48 8b 05 db 2c 00 00 mov 0x2cdb(%rip),%rax # 4010 <y>1335: f0 48 0f b1 15 d2 2c lock cmpxchg %rdx,0x2cd2(%rip) # 4010 <y>133c: 00 00133e: 75 ee jne 132e <_Z17thread_function_2v+0x1e>...1360: 48 8b 05 b1 2c 00 00 mov 0x2cb1(%rip),%rax # 4018 <x>1367: 48 83 f8 01 cmp $0x1,%rax136b: 74 f3 je 1360 <_Z17thread_function_2v+0x50>

Alright, now it looks like we're getting somewhere. lock cmpxchgx to become 0 is still done without the lock prefix. The compiler probably recognizes once again that it's fine to not use an atomic operation to read a value from a variable in this context though, so oh well. It's looking to me like as long as the underlying hardware actually supports atomics, g++ handles this pretty reasonably. Now, let's take a closer look at the functions that were called earlier with the software atomic test...

Reading libistdc++ Source Code

I spent about 3 hours trying to reverse-engineer the C macros, and experienced more trauma than the time my imaginary girlfriend left me. I ended up just compiling libstdc++ on my system with source code and debug symbols enabled (Gentoo privilege), and then stepped through libstdc++ after compare_exchange_weak was called. Note that I'm currently using gcc 14.2.1, and libstdc++ and libatomic come bundled with gcc. You might see completely different things than I did if you go on this journey for yourself.

$ CXXFLAGS="-g" make$ gdb ./gemini-software-atomic...(gdb startup text)...(gdb) break compare_exchange_weakBreakpoint 1 at 0x1935: file /usr/lib/gcc/x86_64-pc-linux-gnu/14/include/g++-v14/atomic, line 342.(gdb) run...Thread 2 "gemini-software" hit Breakpoint 1 ...

Nice, it looks like we're on the right track. After using info threads and bt to look around a little bit, it looks like everything is as expected and we're in the right spot. Note that bt will show a bunch of stuff on the call stack above the thread function. That's stuff that was used to start the thread, so we can safely ignore all of that stuff because it's not relevant while the thread is running.

(gdb) bt#0 std::atomic::compare_exchange_weak (this=0x555555559160 <x>, __e=..., __i=..., __s=std::memory_order_relaxed, __f=std::memory_order_relaxed) at /usr/lib/gcc/x86_64-pc-linux-gnu/14/include/g++-v14/atomic:342 #1 0x00005555555552d7 in thread_function_1 () at gemini-software-atomic.cpp:24...(gdb) s...(gdb) (just press enter to repeat the last command "s" again)...libat_compare_exchange (n=24, mptr=0x555555559180 <y>, eptr=0x7ffff71f0da0, dptr=0x7ffff71f0d60, smodel=0, fmodel=0) at /usr/src/debug/sys-devel/gcc-14.2.1_p20250301/gcc-14-20250301/libatomic/gcas.c:81

After stepping through some asserts and memory alignment stuff, we finally end up in libat_compare_exchange. As far as I can tell, this is the software implementation for atomic compare exchange. After going through a couple more steps, we get to the code that actually does the locking:

libat_lock_n (ptr=ptr@entry=0x555555559180 <y>, n=n@entry=24) at /usr/src/debug/sys-devel/gcc-14.2.1_p20250301/gcc-14-20250301/libatomic/config/posix/lock.c:81

The current implementation of this function generates some kind of hash from the memory address, and then uses that hash to index an array of mutexes. If we want to have any chance at deadlocking C++ atomics, this is the place to do it. Fortunately, the code is quite straight forward and can be viewed easily on the gcc GitHub mirror. This locking function seems... a bit dubious, but let's take a closer look after we look at the rest of the implementation. However, we can note right away that this implementation doesn't seem like it can get itself stuck in a deadlock if your data is just too large, so that's cool at least.

Continuing on, memcmp and memcpy are called, and then the mutexes are released. It appears that with libstdc++'s implementation of atomics, there is no obvious way to deadlock.

Now back to the dubious locking code. Trying to explain the locking code would be quite verbose, so I'll leave it up to you to read through the code if you want to understand how it works.

But I will explain the quirks. Locking any data with software atomics has a chance to block any other thread from locking any data with software atomics, and on x86_64 systems that chance reaches 100% if the atomic data has a size of 4096 bytes or larger.

This has more than just performance implications though. One thread being able to block another thread that's not even trying to access the same memory is incredibly dubious. In fact...

Yes, You Can Deadlock With C++ Atomics

At least in libstdc++. I'll leave it up to you to figure out why this deadlocks, but it does, in fact, deadlock. If you compile and execute the code yourself, you may need to execute it a few times before it deadlocks, however you should most definiteley experience the deadlock in less than 100 runs unless you got unfathomably unlucky.

This implementation might only work with libstdc++, however deadlocking could most definitely be possible in other implementations. Also, I can't say for certain, but I assume that the atomics implementation for JS is more or less just a wrapper around the C++ implementation. But I can say for certain that the C implementation for atomics uses the same code as C++. Other programming languages that rely on the C/C++ atomics implementation are most definitely at risk of deadlocking if you try hard enough.

Final Thoughts

I do not fault the C++ people for wanting to make a universal abstraction for atomics, but this ain't it. The road to hell is paved with good intentions, and this is yet another fractal of bad design. The lesson here is that general abstractions must be exactly that, general. When designing general abstractions, you should be comfortable with abstracting away low level details for the sake of reducing complexity, but you also need to accept the fact that some things, especially unstandardized things like hardware, can never have a general abstraction. Sometimes, you just need to suck it up and write assembly, or vanilla JS, or use the git command line, or do any number of "hard" things.

As an industry, sometimes we need to fucking stop and embrace the hard way. Some things just can not be made easy, and if you're not willing to embrace the difficulty, then you will never make it in this industry. Work hard and grind it out.

Greatness is waiting for you.

AOP